How Semantic Perception and ToF Point Clouds Improve Robot Navigation

How Do Semantic Understanding and ToF Point Clouds Help Mobile Robots Navigate Complex Environments?

As mobile robots spread across smart factories, autonomous logistics, service robotics, and autonomous driving, environmental perception has become a decisive factor in how intelligently they navigate. Three technologies are central to this shift: semantic perception, 3D point cloud detection, and ToF (Time-of-Flight) depth sensing. Together they are redefining how robots understand and interact with complex environments.

By combining ToF point cloud sensing with semantic understanding algorithms, mobile robots can achieve accurate localization, intelligent obstacle recognition, and adaptive path planning in dynamic indoor and outdoor scenarios. This fusion significantly improves navigation reliability, decision-making accuracy, and operational efficiency across industrial automation, warehouse robotics, service robots, and autonomous vehicles.

What Is a Time-of-Flight (ToF) Sensor?

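A Time-of-Flight (ToF) sensor is a depth-sensing technology that measures the distance between the sensor and surrounding objects by timing how long emitted light takes to travel to an object and return. Because the light covers the distance twice, the sensor multiplies the round-trip time by the known speed of light and halves the result to obtain depth. Many ToF cameras measure this time indirectly, as the phase shift of amplitude-modulated light, rather than timing individual pulses.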
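The conversion can be sketched in a few lines of Python. For direct (pulsed) ToF, depth is half the round-trip distance, d = c·t / 2. Indirect ToF cameras instead measure the phase shift φ of light modulated at frequency f, giving d = c·φ / (4πf), which is only unambiguous up to c / (2f). The function names and the 20 MHz modulation frequency are illustrative assumptions, not parameters of any particular sensor.

```python
import math

# Speed of light in a vacuum, in metres per second.
SPEED_OF_LIGHT = 299_792_458.0


def depth_from_round_trip(t_round_trip_s: float) -> float:
    """Direct (pulsed) ToF: the light covers the distance twice, so halve it."""
    return SPEED_OF_LIGHT * t_round_trip_s / 2.0


def depth_from_phase(phase_rad: float, mod_freq_hz: float) -> float:
    """Indirect ToF: distance from the phase shift of amplitude-modulated light.

    A 2*pi phase shift equals one full modulation period of round-trip travel,
    so the result only holds within unambiguous_range(mod_freq_hz).
    """
    return SPEED_OF_LIGHT * phase_rad / (4.0 * math.pi * mod_freq_hz)


def unambiguous_range(mod_freq_hz: float) -> float:
    """Farthest distance before the measured phase wraps around past 2*pi."""
    return SPEED_OF_LIGHT / (2.0 * mod_freq_hz)


if __name__ == "__main__":
    print(depth_from_round_trip(10e-9))  # a 10 ns round trip is roughly 1.5 m
    print(unambiguous_range(20e6))       # about 7.5 m at 20 MHz modulation
```

The unambiguous-range limit is why indirect ToF cameras often combine several modulation frequencies: a lower frequency extends range, a higher one improves precision.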

ToF sensors are widely used in mobile robot navigation, 3D perception, and environment modeling, enabling robots to perceive spatial structure in real time. Because they supply their own infrared illumination, ToF sensors provide usable depth data in low-light and low-texture environments where vision-only systems such as passive stereo cameras struggle; highly reflective or specular surfaces, however, can still corrupt individual measurements.

How ToF Sensors Work

- Emit Light Pulses: The sensor emits infrared or laser pulses into the environment.
- Receive Reflected Signals: Light reflects off objects and returns to the sensor.
- Calculate Time-of-Flight: The round-trip time is measured for each pixel, either directly or as the phase shift of modulated light, then multiplied by the speed of light and halved to give distance.
- Generate Depth Maps and Point Clouds: Per-pixel distances form a depth map, which is converted into a 3D point cloud for navigation and perception.
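The last step, turning a depth map into a point cloud, is usually a pinhole-camera back-projection. The sketch below assumes the depth map stores distance along the optical axis (z) in metres, that 0 marks pixels with no valid return, and that the intrinsics fx, fy, cx, cy come from calibration; some sensors report radial distance instead, which would need an extra conversion.

```python
from typing import List, Tuple

Point = Tuple[float, float, float]


def depth_to_point_cloud(depth: List[List[float]], fx: float, fy: float,
                         cx: float, cy: float) -> List[Point]:
    """Back-project a depth image into camera-frame 3D points.

    depth[v][u] is the z-distance (metres) at pixel column u, row v.
    fx, fy are focal lengths and cx, cy the principal point, all in pixels.
    """
    points: List[Point] = []
    for v, row in enumerate(depth):
        for u, z in enumerate(row):
            if z <= 0.0:  # no valid return for this pixel
                continue
            x = (u - cx) * z / fx
            y = (v - cy) * z / fy
            points.append((x, y, z))
    return points
```

Because every pixel maps to at most one point, the result keeps the image's row and column structure, which later filtering steps can exploit.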

1. Fundamentals of Point Cloud Detection and ToF Technology

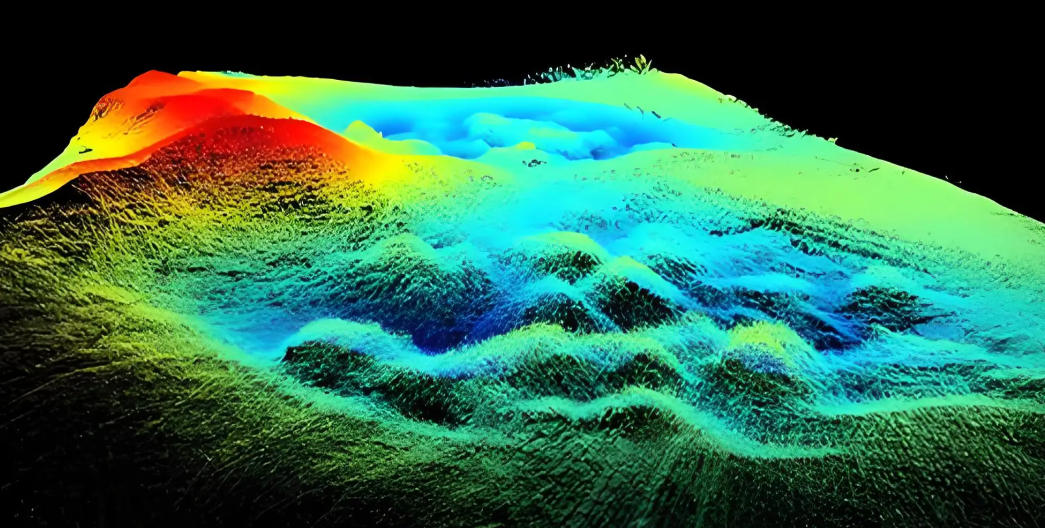

In modern autonomous mobile robots (AMRs), AGVs, autonomous vehicles, and service robots, point cloud detection is a foundational perception technology. Using ToF sensors, LiDAR, or RGB-D cameras, robots capture dense 3D spatial data to understand object geometry, distance, and layout.

1.1 Point Cloud Detection Explained

A point cloud is a collection of 3D data points representing the surfaces of objects in the environment. Each point encodes spatial coordinates, and together they form a detailed 3D representation of surroundings.

Key Functions of Point Cloud Detection

Obstacle Detection and Collision Avoidance

Point clouds allow robots to detect walls, shelves, machinery, pedestrians, vehicles, and other robots. Integrated with navigation algorithms, robots can perform real-time obstacle avoidance and safe route planning.
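A minimal obstacle check can be sketched as a corridor filter: keep the points inside the robot's forward path, ignore floor and overhead returns, and slow down as the nearest one gets closer. The frame convention (x forward, y left, z up, in metres), the height band, and the stop and slow-down distances are all assumptions chosen for illustration.

```python
from typing import Iterable, Optional, Tuple

Point = Tuple[float, float, float]


def nearest_obstacle_ahead(points: Iterable[Point], half_width: float,
                           min_height: float = 0.05,
                           max_height: float = 1.8) -> Optional[float]:
    """Forward distance to the closest point inside the robot's corridor.

    Assumes a robot-centred frame: x forward, y left, z up (metres).
    """
    nearest: Optional[float] = None
    for x, y, z in points:
        if x <= 0.0 or abs(y) > half_width:
            continue  # behind the robot or outside its corridor
        if not (min_height <= z <= max_height):
            continue  # floor returns or overhead structure
        if nearest is None or x < nearest:
            nearest = x
    return nearest


def speed_command(distance: Optional[float], max_speed: float = 1.0,
                  stop_dist: float = 0.5, slow_dist: float = 1.5) -> float:
    """Full speed when clear, zero inside stop_dist, linear ramp in between."""
    if distance is None or distance >= slow_dist:
        return max_speed
    if distance <= stop_dist:
        return 0.0
    return max_speed * (distance - stop_dist) / (slow_dist - stop_dist)
```

A real navigation stack would feed such detections into a local planner rather than only scaling speed, but the filtering step is similar.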

3D Environment Mapping and Navigation Support

Point cloud data feeds into SLAM (Simultaneous Localization and Mapping) systems, enabling robots to build accurate 3D maps for indoor and outdoor navigation.
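Full SLAM estimates the robot's pose and the map jointly, which is beyond a short example. The sketch below shows only the mapping half: it assumes the pose (x, y, yaw) is already known, for example from a SLAM back end, and projects ToF points within an obstacle height band into a 2D occupancy grid. The resolution, height band, and hit threshold are illustrative values.

```python
import math
from typing import Dict, Iterable, Set, Tuple

Point = Tuple[float, float, float]
Pose = Tuple[float, float, float]  # (x, y, yaw) of the robot in the map frame
Cell = Tuple[int, int]


def to_map_frame(p: Point, pose: Pose) -> Point:
    """Rotate by yaw and translate a robot-frame point into the map frame."""
    px, py, yaw = pose
    x, y, z = p
    c, s = math.cos(yaw), math.sin(yaw)
    return (px + c * x - s * y, py + s * x + c * y, z)


def update_occupancy(grid: Dict[Cell, int], points: Iterable[Point], pose: Pose,
                     resolution: float = 0.1, min_height: float = 0.05,
                     max_height: float = 1.8) -> None:
    """Accumulate per-cell hit counts for points in the obstacle height band."""
    for p in points:
        mx, my, mz = to_map_frame(p, pose)
        if min_height <= mz <= max_height:
            cell = (math.floor(mx / resolution), math.floor(my / resolution))
            grid[cell] = grid.get(cell, 0) + 1


def occupied_cells(grid: Dict[Cell, int], min_hits: int = 2) -> Set[Cell]:
    """Cells seen often enough to be treated as obstacles, filtering one-off noise."""
    return {cell for cell, hits in grid.items() if hits >= min_hits}
```

Requiring several hits per cell is a crude stand-in for the probabilistic (log-odds) updates that occupancy mappers typically use.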

Dynamic Environment Monitoring

Point cloud tracking enables robots to detect moving objects and environmental changes, improving safety and operational efficiency in warehouses, factories, and outdoor environments.
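A simple way to flag changes between frames is to compare voxel occupancy: voxels that become occupied suggest something moved in, and voxels that become free suggest something moved away. This sketch assumes both frames are already expressed in the same map frame, with the robot's own motion compensated; real systems usually add object tracking and noise handling on top.

```python
import math
from typing import Iterable, Set, Tuple

Point = Tuple[float, float, float]
Voxel = Tuple[int, int, int]


def voxelize(points: Iterable[Point], size: float) -> Set[Voxel]:
    """Set of integer voxel indices occupied by at least one point."""
    return {(math.floor(x / size), math.floor(y / size), math.floor(z / size))
            for x, y, z in points}


def changed_voxels(prev: Iterable[Point], curr: Iterable[Point],
                   size: float = 0.1) -> Tuple[Set[Voxel], Set[Voxel]]:
    """Return (voxels that became occupied, voxels that became free)."""
    before = voxelize(prev, size)
    after = voxelize(curr, size)
    return after - before, before - after
```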

Advantages of ToF Sensors for Point Cloud Detection

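- High-Precision Depth Measurement: Millimeter- to centimeter-level accuracy at short range, including on low-texture surfaces where passive stereo cameras struggle.
- Low Latency and Real-Time Performance: Each frame captures depth for every pixel at once, which suits fast-moving robots and dynamic obstacle detection.
- Lower Computational Load: ToF frames arrive as organized depth images on a fixed pixel grid, so filtering and neighbor lookups can use simple image operations instead of the spatial search structures that unorganized LiDAR scans often require.
- Indoor–Outdoor Versatility: Usable in warehouses, campuses, streets, and industrial sites, although strong sunlight reduces range and increases noise outdoors.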

2. Challenges of Point Cloud-Based Navigation

Despite its strengths, point cloud detection alone has limitations that restrict intelligent robot behavior.

2.1 Lack of Semantic Understanding

Point clouds primarily provide geometric data—shape, size, and position—without understanding object meaning. For example, a robot may detect a vertical plane but cannot distinguish whether it is a wall, door, or glass panel.

This limitation reduces navigation intelligence in smart warehouses, service robots, and autonomous driving scenarios.
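To make the limitation concrete, consider RANSAC plane fitting, a common geometric primitive for point clouds. The sketch below finds the dominant plane, and its output is only a unit normal, an offset, and a list of inlier points; nothing in that result says whether the plane is a wall, a door, or a glass panel. The distance threshold and iteration count are illustrative.

```python
import math
import random
from typing import List, Optional, Sequence, Tuple

Point = Tuple[float, float, float]
Plane = Tuple[Point, float]  # (unit normal n, offset d) with n . p + d = 0


def plane_from_points(a: Point, b: Point, c: Point) -> Optional[Plane]:
    """Plane through three points, or None if they are (nearly) collinear."""
    u = (b[0] - a[0], b[1] - a[1], b[2] - a[2])
    v = (c[0] - a[0], c[1] - a[1], c[2] - a[2])
    n = (u[1] * v[2] - u[2] * v[1],
         u[2] * v[0] - u[0] * v[2],
         u[0] * v[1] - u[1] * v[0])  # cross product u x v
    norm = math.sqrt(n[0] ** 2 + n[1] ** 2 + n[2] ** 2)
    if norm < 1e-9:
        return None
    n = (n[0] / norm, n[1] / norm, n[2] / norm)
    return n, -(n[0] * a[0] + n[1] * a[1] + n[2] * a[2])


def ransac_plane(points: Sequence[Point], threshold: float = 0.02,
                 iterations: int = 200, seed: int = 0
                 ) -> Tuple[Optional[Plane], List[Point]]:
    """Keep the sampled plane with the most points within threshold metres."""
    rng = random.Random(seed)
    best_plane: Optional[Plane] = None
    best_inliers: List[Point] = []
    for _ in range(iterations):
        plane = plane_from_points(*rng.sample(points, 3))
        if plane is None:
            continue
        n, d = plane
        inliers = [p for p in points
                   if abs(n[0] * p[0] + n[1] * p[1] + n[2] * p[2] + d) <= threshold]
        if len(inliers) > len(best_inliers):
            best_plane, best_inliers = plane, inliers
    return best_plane, best_inliers
```

Assigning a meaning to the returned plane requires a separate semantic step, which is exactly the gap this section describes.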

2.2 Environmental Sensitivity

Outdoor lighting changes, rain, fog, snow, or reflective surfaces can introduce noise into point cloud data. Dynamic environments with moving people or vehicles require rapid updates to maintain navigation accuracy.
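One common countermeasure for sparse noise is statistical outlier removal: points whose average distance to their k nearest neighbors is unusually large compared with the rest of the cloud are discarded. The brute-force sketch below is O(N²) and only meant for illustration; k and std_ratio are assumed values, and production code would use a k-d tree or the organized structure of the depth image.

```python
import math
import statistics
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]


def remove_statistical_outliers(points: Sequence[Point], k: int = 4,
                                std_ratio: float = 1.0) -> List[Point]:
    """Drop points whose mean k-nearest-neighbour distance is unusually large.

    A point is kept if its mean neighbour distance is at most
    mean + std_ratio * std over all points.
    """
    if len(points) <= k:
        return list(points)
    mean_dists = []
    for i, p in enumerate(points):
        dists = sorted(math.dist(p, q) for j, q in enumerate(points) if j != i)
        mean_dists.append(sum(dists[:k]) / k)
    limit = statistics.mean(mean_dists) + std_ratio * statistics.pstdev(mean_dists)
    return [p for p, m in zip(points, mean_dists) if m <= limit]
```

This handles isolated speckle; systematic errors such as multipath near reflective corners need sensor-specific correction instead.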

2.3 Computational Complexity

High-density point clouds require filtering, segmentation, registration, and feature extraction before use. Achieving real-time performance often demands GPU acceleration, AI chips, or edge computing platforms.
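Filtering often starts with voxel-grid downsampling, which bounds the number of points passed to later stages. The sketch below replaces all points in each cubic voxel with their centroid; the voxel size is an assumed tuning parameter that trades detail for speed.

```python
import math
from typing import Dict, Iterable, List, Tuple

Point = Tuple[float, float, float]


def voxel_downsample(points: Iterable[Point], voxel_size: float) -> List[Point]:
    """Replace all points inside each cubic voxel by their centroid."""
    sums: Dict[Tuple[int, int, int], List[float]] = {}
    for x, y, z in points:
        key = (math.floor(x / voxel_size), math.floor(y / voxel_size),
               math.floor(z / voxel_size))
        acc = sums.setdefault(key, [0.0, 0.0, 0.0, 0.0])
        acc[0] += x
        acc[1] += y
        acc[2] += z
        acc[3] += 1.0  # point count in this voxel
    return [(sx / n, sy / n, sz / n) for sx, sy, sz, n in sums.values()]
```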

2.4 Sensor Fusion Requirements

Single-sensor perception is insufficient for complex environments. Effective navigation relies on fusing ToF, LiDAR, RGB cameras, and IMU data to enhance robustness and reliability.
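As a minimal illustration of why fusion helps, the sketch below combines independent estimates of the same range (for example from a ToF camera and a LiDAR) by inverse-variance weighting, which is the static special case of a Kalman filter update. The variances are assumed inputs; real systems fuse full state estimates, usually with Kalman filters or factor graphs.

```python
from typing import Sequence, Tuple


def fuse_measurements(measurements: Sequence[Tuple[float, float]]) -> Tuple[float, float]:
    """Inverse-variance weighted mean of independent (value, variance) pairs.

    Returns the fused value and its variance, which is never larger than
    the smallest input variance.
    """
    weights = [1.0 / var for _, var in measurements]
    total = sum(weights)
    value = sum(w * v for w, (v, _) in zip(weights, measurements)) / total
    return value, 1.0 / total
```

For example, a hypothetical ToF reading of 2.00 m with a 2 cm standard deviation and a LiDAR reading of 2.10 m with a 4 cm standard deviation fuse to about 2.02 m, with a smaller variance than either input.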

3. Trends in Point Cloud and Semantic Optimization

ToF + Point Cloud + Semantic Fusion

Deep learning-based semantic segmentation of point clouds enables robots to recognize object categories and functional attributes, transforming raw geometry into meaningful environmental understanding.
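Point-cloud segmentation networks themselves are too large to sketch here. A simpler fusion step that is widely used alongside them is label painting: a 2D segmentation model labels the RGB image, and each ToF point takes the label of the pixel it projects to. The sketch assumes the points are already transformed into the RGB camera's frame (z forward), that fx, fy, cx, cy are that camera's intrinsics, and that mask[v][u] holds an integer class id from some segmentation model that is not shown.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]


def paint_labels(points: Sequence[Point], mask: List[List[int]],
                 fx: float, fy: float, cx: float, cy: float,
                 unknown: int = -1) -> List[int]:
    """Attach a 2D semantic label to each 3D point by pinhole projection."""
    h, w = len(mask), len(mask[0])
    labels: List[int] = []
    for x, y, z in points:
        if z <= 0.0:  # behind the camera: cannot project
            labels.append(unknown)
            continue
        u = int(round(fx * x / z + cx))  # nearest pixel column
        v = int(round(fy * y / z + cy))  # nearest pixel row
        if 0 <= u < w and 0 <= v < h:
            labels.append(mask[v][u])
        else:
            labels.append(unknown)  # outside the camera's field of view
    return labels
```

The depth channel then lets the robot reason about where a labeled object is in 3D, which the 2D mask alone cannot provide.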

Dynamic Environment Adaptation

Fusing ToF depth data with visual perception allows robots to detect and predict pedestrian or vehicle motion, improving outdoor navigation safety.
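A minimal form of motion prediction is a constant-velocity model: estimate a tracked pedestrian's velocity from two consecutive centroid positions, roll both the pedestrian and the robot forward in time, and report the closest approach. The sketch assumes 2D positions in a shared map frame and an already-solved detection and tracking step; the horizon and time step are illustrative.

```python
import math
from typing import Tuple

Vec2 = Tuple[float, float]


def closest_approach(robot_pos: Vec2, robot_vel: Vec2, ped_prev: Vec2,
                     ped_curr: Vec2, dt: float, horizon: float = 2.0,
                     step: float = 0.1) -> Tuple[float, float]:
    """Return (minimum separation in metres, time in seconds when it occurs).

    Pedestrian velocity is a finite difference of two positions dt seconds
    apart; both agents are assumed to keep constant velocity over the horizon.
    """
    pvx = (ped_curr[0] - ped_prev[0]) / dt
    pvy = (ped_curr[1] - ped_prev[1]) / dt
    best = (math.inf, 0.0)
    for k in range(int(round(horizon / step)) + 1):
        t = k * step
        rx = robot_pos[0] + robot_vel[0] * t
        ry = robot_pos[1] + robot_vel[1] * t
        px = ped_curr[0] + pvx * t
        py = ped_curr[1] + pvy * t
        sep = math.hypot(px - rx, py - ry)
        if sep < best[0]:
            best = (sep, t)
    return best
```

A planner could slow down or replan when the returned separation falls below the robot's safety radius.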

Edge Computing and AI Acceleration

Deploying AI accelerators enables real-time point cloud processing and semantic inference, supporting millisecond-level response times.

II. Integrating Semantic Understanding with ToF Sensors

Semantic understanding enables robots to go beyond sensing geometry to interpreting meaning. When combined with ToF depth sensing, robots achieve a higher level of autonomous intelligence.

1. Role of Semantic Perception

Object Recognition and Classification

Robots identify objects such as shelves, doors, equipment, or people and understand their functions.

Scene Understanding and Traversability Analysis

Semantic perception distinguishes navigable areas from obstacles and identifies stairs, ramps, or doors for optimal path planning.
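Traversability analysis can be sketched as a semantic cost map: each grid cell carries a class label from the perception stack, a lookup table turns labels into traversal costs, and a shortest-path search plans over those costs. The class names and cost values below are hypothetical, and Dijkstra's algorithm stands in for whatever planner a real navigation stack uses.

```python
import heapq
from typing import Dict, List, Optional, Tuple

Cell = Tuple[int, int]

# Hypothetical class-to-cost table; None means the cell is not traversable.
CLASS_COST: Dict[str, Optional[float]] = {
    "floor": 1.0,
    "door": 1.5,
    "ramp": 2.0,     # passable but slower
    "person": None,  # never plan through people
    "shelf": None,
    "stairs": None,  # impassable for a wheeled base
}


def plan(labels: List[List[str]], start: Cell,
         goal: Cell) -> Optional[Tuple[float, List[Cell]]]:
    """Dijkstra over a 4-connected grid; unknown labels are treated as blocked."""
    rows, cols = len(labels), len(labels[0])
    dist: Dict[Cell, float] = {start: 0.0}
    parent: Dict[Cell, Cell] = {}
    heap = [(0.0, start)]
    while heap:
        d, cell = heapq.heappop(heap)
        if cell == goal:
            path = [cell]
            while cell in parent:
                cell = parent[cell]
                path.append(cell)
            return d, path[::-1]
        if d > dist.get(cell, float("inf")):
            continue  # stale heap entry
        r, c = cell
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = nxt
            if not (0 <= nr < rows and 0 <= nc < cols):
                continue
            cost = CLASS_COST.get(labels[nr][nc])
            if cost is None:
                continue
            nd = d + cost
            if nd < dist.get(nxt, float("inf")):
                dist[nxt] = nd
                parent[nxt] = cell
                heapq.heappush(heap, (nd, nxt))
    return None
```

Treating unknown labels as blocked is a conservative design choice; a robot that must explore would instead give them a high but finite cost.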

Intelligent Task Planning

Robots adjust routes, prioritize tasks, and collaborate with other robots based on semantic context.

2. How ToF Enhances Semantic Understanding

- Accurate 3D Spatial Context for Semantic Segmentation
- Stable Depth Perception in Low-Light Conditions
- Real-Time Tracking of Dynamic Obstacles
- Robust Multi-Sensor Fusion for SLAM and Navigation

3. Application Scenarios

Smart Warehouse Robots

ToF point clouds combined with semantic recognition enable precise picking, stacking, and autonomous transport.

Service Robots

Robots navigate crowded public spaces using semantic understanding to recognize people and obstacles.

Autonomous Driving and Outdoor Robots

ToF sensors detect pedestrians and vehicles, while semantic perception interprets road structures and traffic elements.

Industrial Inspection Robots

Robots identify equipment, pipelines, and hazardous areas for automated inspection.

Conclusion

The integration of ToF point cloud sensing and semantic understanding represents a major advancement in mobile robot navigation. By combining accurate depth perception, intelligent object recognition, and real-time decision-making, robots can navigate complex indoor and outdoor environments safely and efficiently.

As these technologies mature, they will continue to drive innovation in autonomous vehicles, smart logistics, industrial automation, and service robotics—bringing truly intelligent autonomous navigation closer to reality.
